The New Colonialism: Power, Data, and the Transformation of Human Experience - Tripp Fuller

Homebrewed Christianity Podcast with Tripp Fuller

The Digital Frontier: Where Power Becomes Invisible

When you woke up this morning, you likely checked your phone before doing anything else. This simple gesture – this daily genuflection to our digital devices – reveals more about our current moment than we might imagine. Just as medieval Christians oriented their lives around the church tower's bells, we now orient ourselves around notification pings and social media updates. But unlike those church bells, which called communities together in physical space, our digital rituals often pull us into vast territories of data extraction where our very experiences become commodities.

To understand the scale of this transformation, consider that Facebook's user base now exceeds the population of any single country in human history. Google processes over 3.5 billion searches every day – more than the number of prayers ever uttered in all the world's temples and churches combined. These aren't just impressive statistics; they represent unprecedented control over human attention and interaction.

The tech giants that control these digital territories present themselves as neutral facilitators of human connection – simply providing digital versions of familiar public spaces like town squares or community centers. But this comforting narrative masks a more troubling reality that we must understand if we hope to reclaim our digital future.

The Myth of Digital Public Spaces: Understanding What We've Lost

Let me take you back to a moment in history that illuminates our present situation. In its early days, the internet was envisioned as something radically different from what it has become. It emerged from a unique fusion of military pragmatism and countercultural idealism – a publicly funded network imagined as a tool for human freedom and cognitive enhancement. Those early pioneers dreamed of a decentralized space where information could flow freely, uncontrolled by any single authority.

But over three decades, we've witnessed what scholars call a "triple revolution": the commercialization of the internet, the rise of mobile devices that keep us constantly connected, and the emergence of social media platforms that mediate our relationships. This transformation has fundamentally altered the nature of digital space in ways that undermine genuine human connection.

To understand why this matters, imagine gathering with friends in two different settings. In a physical space – say, a local tap room – your interactions exist only in the moment and in the memories of those present. But when you gather on a digital platform, every laugh, every shared story, every moment of connection generates hundreds of data points. The platform records not just what you say, but how long you look at each post, what emotions you express, who you interact with, and what you do next. This continuous data capture transforms social interaction into a resource to be extracted and monetized.

This creates what scholars call "asymmetrical data relations" – a dynamic that would be immediately obvious as dystopian in physical space. Imagine sitting on a barstool while one entity sees everything everyone does, knows what they're thinking, and predicts what they'll do next, yet reveals nothing about itself. This is exactly how digital platforms operate, creating what ethicists have termed "surveillance asymmetry" – they see everything we do, while we see only what they choose to show us.

But the transformation goes deeper than surveillance. Unlike physical spaces where we naturally gravitate toward friends and interests, digital platforms actively curate our social experiences through algorithms. These unseen systems decide whose posts we see, which news we encounter, even which friends appear in our feeds. It's as if an invisible hand constantly rearranges the furniture and guests at a dinner party based on what will keep people talking – and generating data – the longest.

The Four-X Model: How Digital Empires Expand

This transformation of social spaces into data territories doesn't happen by accident. It follows a systematic pattern that eerily mirrors historical colonial expansion – what scholars call the "Four-X Model" of digital conquest. Understanding this pattern helps us see how platforms methodically colonize human experience.

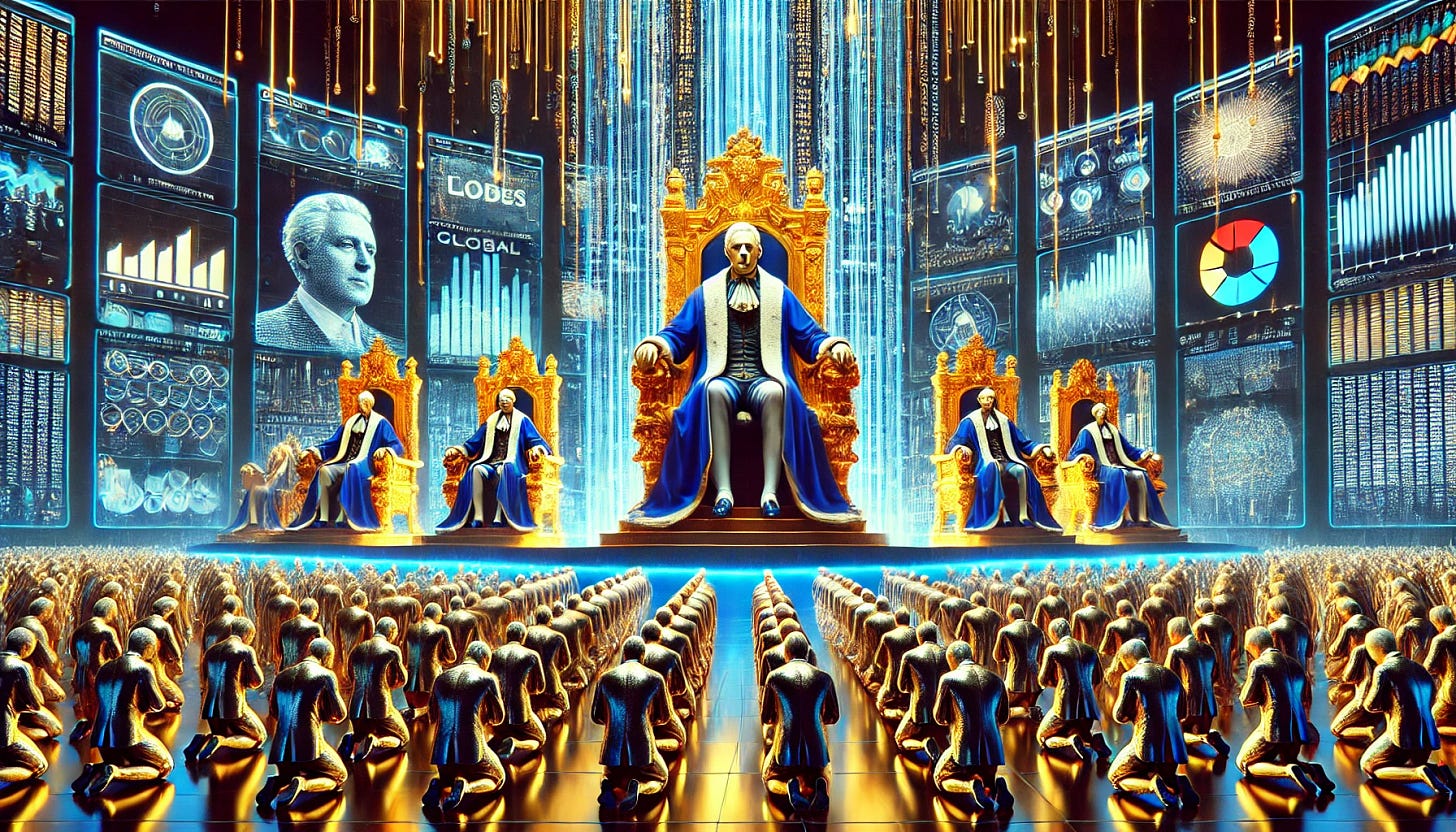

Remember that scene from Trump’s recent inauguration, with a line of tech billionaires behind him as he took the oath of office? Seated across from all the living Presidents were no longer the governors from across the country but the most powerful lords of our digital space. Let's trace how this power builds through each stage of the Four-X Model in order to identify the newly emerging colonial power.

First comes "Explore." Just as colonial powers once sent scouts to map unknown territories, digital platforms begin by mapping social connections and behavior patterns. Facebook's early days on college campuses offer a perfect example. They weren't just launching a service; they were mapping the social terrain of young adults, understanding how friendship networks form and evolve. Every "like," every friend request, every shared photo became data points in an emerging map of human social behavior.

The "Expand" phase follows, but with a crucial difference from historical colonialism. Instead of sending ships and soldiers, platforms expand through what they call "network effects" – the idea that a service becomes more valuable as more people use it. WhatsApp and YouTube don't need to force anyone to join; they create conditions where staying out becomes socially costly. Think about how difficult it is to maintain professional connections without LinkedIn, or to coordinate with family without WhatsApp. This isn't just convenience; it's a form of soft coercion.

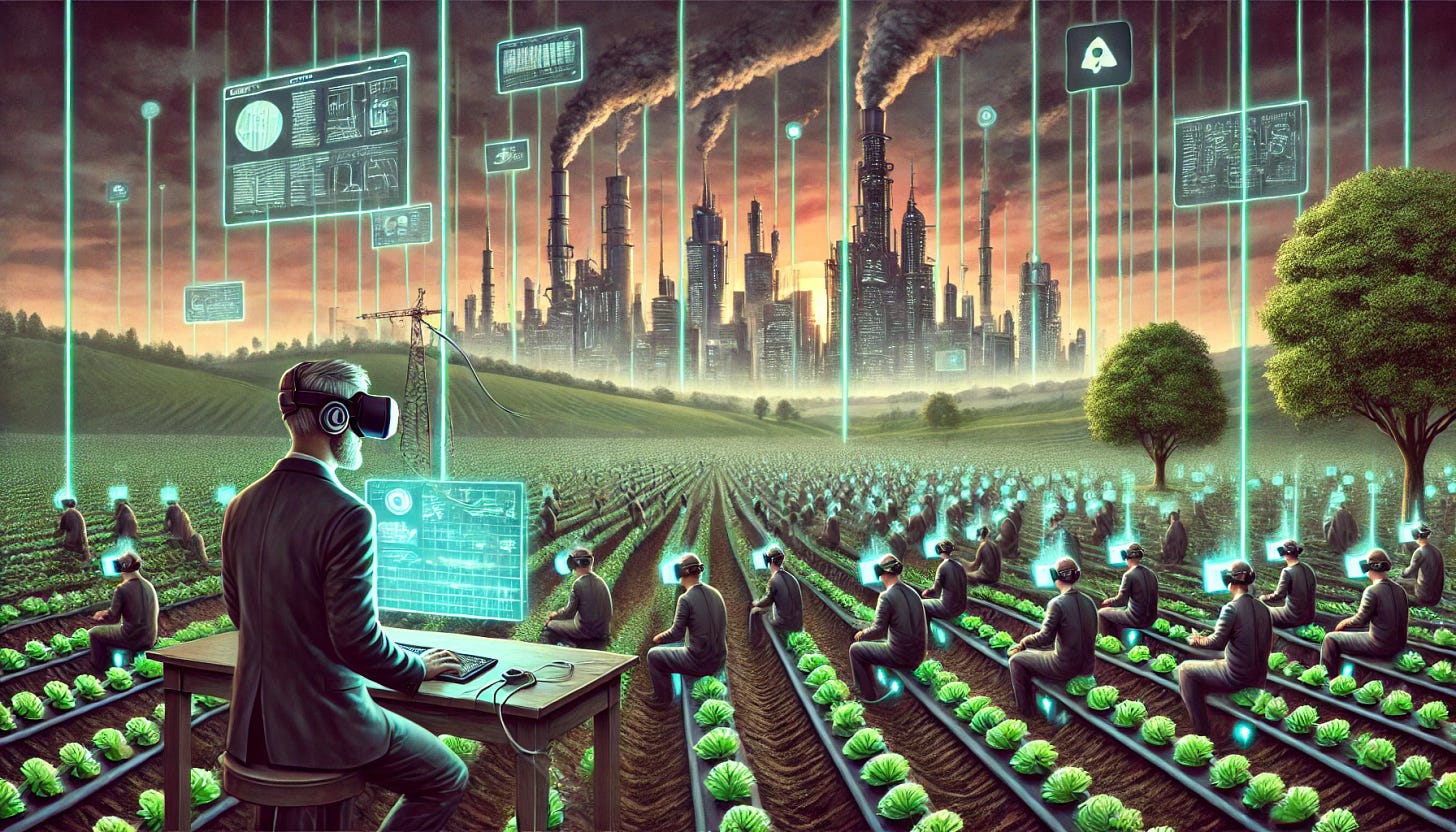

The "Exploit" phase reveals itself in the platforms' business models. Each time we scroll through our feeds, our attention, our emotions, our relationships are transformed into profitable data points. Consider how a simple birthday greeting to a friend – once a private moment of connection – now generates multiple data points about our social networks, our communication patterns, even our emotional responses. The colonial sugar plantations of the Caribbean extracted wealth from human labor; today's digital plantations extract wealth from human experience itself.

But it's the final X – "Exterminate" – that shows the true scope of digital colonialism's ambition. Just as colonial powers systematically eliminated alternative ways of living and knowing, today's tech platforms are steadily eliminating alternative ways of being social, of finding information, of maintaining relationships. When was the last time you remembered a friend's phone number? How often do you write a letter by hand? These aren't just changes in habit – they represent the systematic extinction of non-digital ways of being human.

The scale of this transformation becomes clear when we look at how completely platforms have colonized certain aspects of human life. Take dating, for instance. In just two decades, we've moved from a world where most people met their partners through social networks, community gatherings like church, or chance encounters to one where algorithms mediate our most intimate connections. Match Group, which owns Tinder, OkCupid, and other dating apps, now effectively controls access to potential partners for hundreds of millions of people. This isn't just a change in technology – it's a fundamental restructuring of how human relationships form.

The New Colonial Class: Silicon Valley's Digital Aristocracy

To understand who benefits from this new colonial system, we need to examine how power accumulates in the digital age. Just as the Dutch East India Company created a new merchant aristocracy that rivaled traditional nobility, today's tech platforms have given rise to a new ruling class that often exceeds the power of traditional political leaders.

Let's return to that scene at the inauguration, but look at it through a different lens. The tech leaders aren’t simply wealthy industrialists celebrating a new president. They represent something unprecedented in human history: private individuals who control the primary channels of human communication, connection, and commerce for billions of people. Their platforms don't just facilitate interaction – they shape how we understand reality itself.

Consider the level of control: A nineteenth-century British colonial officer could never know what every person in their territory was thinking, saying, or doing. But today's platforms track, analyze, and influence billions of human interactions every second. When Mark Zuckerberg makes a decision about Facebook's algorithm, it immediately affects how hundreds of millions of people connect with their friends and family. When Google adjusts its search rankings, it shapes how billions of people understand their world. This isn't just market power – it's a form of governance without accountability.

This new colonial class shares striking similarities with its historical predecessors. Just as colonial enterprises concentrated power in the hands of a small, predominantly white, male elite, today's tech industry shows similar patterns of exclusion. In 2023, less than 2% of venture capital funding went to Black founders. Women remain dramatically underrepresented in tech leadership. The colonial mindset of the past has been encoded into the very structure of our digital future.

But perhaps the most telling parallel lies in how this new colonial class views its own power. In 1899, Rudyard Kipling wrote of the "white man's burden" – the supposed moral duty of colonizers to "civilize" the colonized. Today, we hear echoes of this same patronizing ideology when tech leaders speak of "connecting the world" or "making the world more open and transparent." The language has changed, but the underlying assumption remains: that a small, privileged class has the right – even the duty – to reshape how billions of humans live and connect.

This reshaping occurs through what one can call "algorithmic governance" – the use of code and data to control human behavior at scale. Unlike traditional forms of power that rely on visible and external force, algorithmic governance works through subtle manipulation of our digital environment. When platforms adjust their recommendation algorithms to maximize "engagement," they're not just optimizing a technical system – they're implementing policies that shape human behavior as surely as any law or regulation.

The result is a new form of sovereignty that operates beyond traditional democratic controls. These digital aristocrats can influence elections, shape public discourse, and modify human behavior without any meaningful oversight or accountability. Their power extends beyond national borders, creating what scholars call "platform empires" that operate according to their own rules and serve their own interests.

The Erosion of Autonomy: When Algorithms Reshape Human Experience

To understand how deeply digital colonialism affects us, we need to examine what happens in those quiet moments when we're alone with our devices. Consider a simple morning ritual: you wake up and reach for your phone, intending to check the weather. Thirty minutes later, you find yourself still scrolling, having followed a trail of "recommended" content you never meant to see. This isn't a failure of willpower – it's the successful execution of algorithmic design.

These platforms shape our behavior through what scholars of technology have termed "hypernudging." To grasp what this really means, imagine walking through a city where the streets mysteriously rearrange themselves based on your previous footsteps, subtly guiding you toward certain shops while making other paths harder to find. This is essentially what happens in our digital landscapes, where algorithms continuously reshape our environment based on our past behavior.

The transformation runs deeper than mere distraction. When medieval Christians gathered in cathedrals, the soaring architecture and stained glass were designed to lift their thoughts toward the divine. The shared narrative included an image of the good life that directed each person to love their neighbor as themselves. Today's digital architectures are designed with a different purpose – to keep our attention firmly earthbound, focused on consuming content and generating data. The "infinite scroll" isn't just a design feature; it's a spiritual technology that trains us to value quantity over quality, novelty over depth, reaction over reflection.

Remember how we discussed the asymmetrical data relations in digital spaces? This asymmetry becomes most apparent in how it affects our decision-making. The platforms know not just what we've done, but what similar users have done, allowing them to predict and shape our choices before we make them. When Netflix suggests your next show or Amazon recommends your next purchase, they're not just guessing – they're using vast datasets of human behavior to guide your actions in ways that serve their interests.

This is algorithmic governance at work – a system where our choices are increasingly shaped by invisible computational systems. Unlike traditional forms of control that operate through external force, algorithmic governance works by restructuring our environment in ways that make certain behaviors more likely than others. It's as if the world itself has been programmed to nudge us toward particular actions, thoughts, and feelings. The creators of this digital space have their own incentive structure, and it is not aimed at our flourishing or that of our communities.

Consider how this affects something as fundamental as human relationships. When we share news of a promotion on LinkedIn, congratulations pour in – but how many of these responses come from genuine human impulse, and how many from the platform's prompts to "congratulate" our connections? The boundary between authentic human interaction and algorithmically induced behavior grows increasingly blurred.

What we're witnessing isn't just the commodification of attention – it's the colonization of human consciousness itself. Just as historical colonialism sought to "civilize" Indigenous peoples by replacing their worldviews with European values, digital colonialism seeks to replace our natural social instincts with platform-mediated behaviors. The result is what can be called "digital dissociation" – a state where we're increasingly present online but absent from our embodied lives.

This erosion of autonomy is particularly evident in how platforms shape our understanding of the world. The algorithms that determine what news we see, what perspectives we encounter, and what information we consider credible are optimized not for truth or understanding, but for engagement. This creates what tech critics call "reality tunnels" – personalized versions of the world that can differ dramatically from person to person, making shared understanding increasingly difficult. There’s a reason so many of us think family and friends live in a different world – they do, and it is a feature of the system, not a bug.

Resistance and Reimagination: Reclaiming Our Digital Commons

To understand how we might resist digital colonialism, we must first remember that the internet wasn't always a colonized space. Those early pioneers, many steeped in the revolutionary spirit of 1960s California, envisioned something radically different from what we have today: a decentralized space where information could flow freely, uncontrolled by any single authority.

This origin story matters because it reminds us that our current reality – of corporate platforms controlling and monetizing human connection – wasn't inevitable. It resulted from deliberate choices made over three decades: the commercialization of the internet, the rise of mobile devices that keep us constantly connected, and the emergence of social media platforms that mediate our relationships, the "triple revolution" mentioned above. Understanding this history helps us imagine different possibilities for our digital future.

The transformation from public commons to corporate territory happened gradually, much like the historical enclosure of physical commons. Just as medieval peasants once had shared spaces for grazing animals and gathering together, the early internet provided shared spaces for communication and collaboration. But just as those physical commons were eventually fenced off by private interests, our digital commons has been steadily enclosed by corporate platforms, transforming what was once free and open into controlled, revenue-generating territories.

Consider what happened when Mark Zuckerberg appeared before Congress in January 2024 to discuss the impact of social media on young people. The senators struggled to even articulate the nature of the problem, much less propose solutions. This moment revealed something crucial about our current predicament: our traditional democratic institutions haven't yet developed the tools to govern these new forms of power. We're trying to regulate digital empires with laws designed for an analog world.

But resistance to digital colonialism is already emerging, often in unexpected places. In Barcelona, citizens have created a "digital sovereignty" initiative, building public platforms for civic engagement that aren't controlled by corporate interests. In India, farmers have developed their own communication networks to organize and share agricultural knowledge, bypassing corporate platforms. Indigenous communities worldwide are creating their own data governance systems, ensuring their cultural knowledge isn't exploited by colonial algorithms.

These aren't just isolated projects – they're seeds of digital resistance movements. Just as historical anti-colonial movements developed new forms of solidarity and resistance, these initiatives are creating new models for digital autonomy and community control. They show us that another kind of digital world is possible, one that serves human flourishing rather than corporate profit.

The path forward requires us to reimagine not just our technology, but our relationship with it. This means developing what I call "digital wisdom practices":

First, we must reclaim our attention through regular rituals of disconnection. Just as traditional communities maintained sacred spaces free from commerce, we need to create digital sanctuaries where human connection isn't mediated by algorithms or monitored for profit.

Second, we need to build local digital commons – platforms and networks owned and governed by communities rather than corporations. This isn't about rejecting technology, but about ensuring it serves the needs of human communities rather than distant shareholders.

Third, we must develop new forms of digital literacy that go beyond just teaching people how to use technology. We need to understand how digital systems shape our perception, influence our behavior, and affect our relationships. This means teaching our children to be not just "digital natives" but "digital citizens" capable of critically engaging with and shaping their digital environment.

The Human Imperative: Choosing Our Digital Future

As we stand at this crossroads of human history, the stakes of our digital colonization become increasingly clear. We face a choice similar to that faced by communities under historical colonialism: Do we accept the colonial system as inevitable, or do we dare to imagine and create alternatives? The answer, I believe, lies not in wholesale rejection of digital technology, but in reclaiming it for human purposes.

Consider again that scene at Trump’s inauguration. That moment captured something essential about our current predicament: the merger of digital and traditional power structures into something unprecedented in human history. But it also revealed something else – the vulnerability of even the most powerful systems to public scrutiny and collective action. When that image made headlines, it sparked crucial conversations about the nature of power in our digital age.

The challenge we face isn't simply technical or political – it's fundamentally about what it means to be human in an age of algorithmic governance. When platforms reduce our complex social lives to data points, when algorithms shape our perceptions and choices, when our most intimate moments become resources for extraction, we lose something essential to human flourishing: our capacity for genuine autonomy and authentic connection.

By understanding how we got here, and by seeing the choices that built and then colonized the digital space, we can begin to have hope for another world. The internet's transformation into a system of digital colonies wasn't inevitable, which means different choices could lead to different outcomes.

We're already seeing glimpses of alternative futures emerging. When Barcelona's citizens build public digital platforms, when Indian farmers create independent communication networks, when Indigenous communities develop their own data governance systems, they're not just resisting digital colonialism – they're imagining new ways of being digital that honor human dignity and community autonomy.

The path forward requires us to develop digital wisdom – a way of engaging with technology that preserves our essential humanity while benefiting from digital tools. This means creating rituals and practices that help us maintain our autonomy while participating in digital life. It means building platforms and networks that serve human flourishing rather than corporate profit. Most importantly, it means remembering that we are not passive subjects in this new colonial regime, but active agents capable of shaping its future.

As we confront this moment of unprecedented technological transformation, solidarity becomes an act of radical reimagination – a collective effort to preserve our full, irreducible human potential. The colonizers have changed, but the fundamental dynamics of extraction and control remain disturbingly familiar. The digital frontier is the new battleground for human rights, privacy, and collective autonomy.

We are facing a paradigm shift requiring a new approach to social theory and ethics. The first step is understanding the system – recognizing that our digital lives are far from neutral territory. But understanding alone isn't enough. We must act, both individually and collectively, to reclaim our digital commons and create technologies that serve human flourishing rather than corporate profit.

The struggle against digital colonialism is, at its heart, a struggle for the human soul in the digital age. It calls us to reimagine not just how we use technology, but who we are as human beings. In this great task, we might find wisdom in the words of those who resisted historical colonialism: the power to shape the future lies not in the tools themselves, but in our collective capacity to imagine and create more human ways of being digital.

More Resources

The Tech Takeover: Reimagining Connection in a Digital World with Tripp Fuller and Bo Sanders (video)

Data Grab: The New Colonialism of Big Tech and How to Fight Back by Ulises A. Mejias & Nick Couldry

The Space of the World: Can Human Solidarity Survive Social Media and What If It Can't? by Nick Couldry

AI and the Tragedy of the Commons: A Decolonial Perspective with Ulises A. Mejias (video)

The Corporatization of Social Space by Nick Couldry (video)


Tripp - this is amazing and frightening at the same time. I look forward to next steps to a practice of becoming human in a digital space. Thank you.

Candy Adams

Thanks, Tripp. Your analysis of this new era encourages me and tweaks my interest. For the first time I’m sensing possible “grounding” in what to me has been “ethereal”. More! More!